date:

2015

my role:

design, coding

exhibited:

World Science Festival 2016 | ITP Winter Show 2015

Dandi is a screen-based project featuring a digital dandelion that blows away, as if by the wind, in the direction of whoever has just walked in front of it.

The project is built with p5.js and a particle systems library.

It uses distance-measuring sensors, the NewPing library, and an Arduino to determine the direction in which a person walks past the sensor box.
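One way the direction detection could work is sketched below. This is an illustration in Python, not the project's Arduino or p5.js code. It assumes two sensors placed side by side (a "left" and a "right" one) and a hypothetical threshold of 100 cm; the function name `walking_direction` is also made up for this example. The idea is simply that whichever sensor notices the person first tells us where they came from.

```python
from typing import List, Optional, Tuple

# Hypothetical threshold: a reading below this means someone is in front of the sensor.
THRESHOLD_CM = 100.0


def walking_direction(readings: List[Tuple[float, float]]) -> Optional[str]:
    """Infer walking direction from a time series of (left_cm, right_cm) readings.

    Returns "left_to_right", "right_to_left", or None if undetermined.
    """
    first_left = None   # index of the first sample where the left sensor triggered
    first_right = None  # index of the first sample where the right sensor triggered
    for i, (left_cm, right_cm) in enumerate(readings):
        if first_left is None and left_cm < THRESHOLD_CM:
            first_left = i
        if first_right is None and right_cm < THRESHOLD_CM:
            first_right = i
    # Both sensors must trigger, and not at the same moment.
    if first_left is None or first_right is None or first_left == first_right:
        return None
    return "left_to_right" if first_left < first_right else "right_to_left"
```

The resulting direction could then be passed to the sketch on screen to decide which way the dandelion seeds are blown.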

date:

2017

my role:

creator, designer

created for:

NYU – Master’s Degree – Thesis

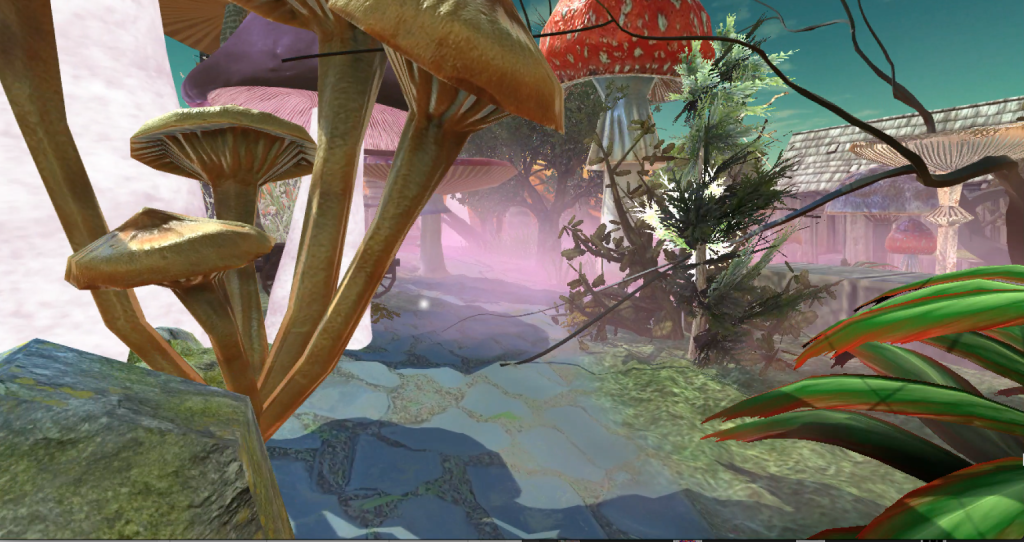

“The Dream Catcher” is a Virtual Reality (VR) experience that takes users on a journey through one of my most peculiar dreams, where anything and everything can unfold. This immersive VR adventure presents a collection of seven vastly different scenes, each transporting users to a realm that blurs the boundaries of reality and imagination.

The primary goal of the project was to explore the enigmatic nature of dreams and showcase their captivating diversity. Users embark on a roller coaster of emotions, from feeling empowered while exploring dreamlike landscapes to suddenly losing control during unexpected scene transitions. The experience aims to highlight the unpredictable nature of dreams and the vast array of emotions we can encounter in the fleeting moments of slumber.

Throughout the journey, the experience mesmerizes, shocks, irritates, scares, and surprises users, evoking a spectrum of emotions that mirror the complexity of dreams. Users may encounter moments of empowerment, only to find themselves unexpectedly deprived of sight or movement. These sudden shifts emulate the vivid and unpredictable nature of dreams, challenging users to adapt and embrace the surreal.

date:

December 2016

my role:

programmer, designer

for:

NYU ITP

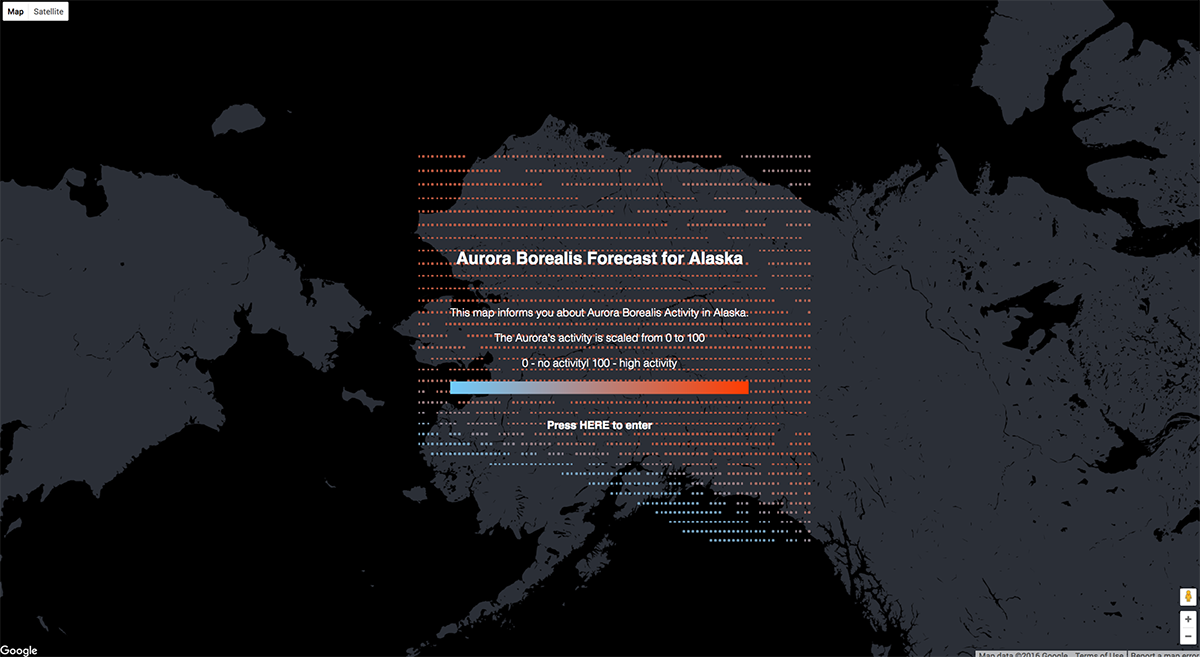

“Aurora Borealis Forecast” is an online application that gives users current information about Aurora Borealis activity in Alaska. The platform is tailored to photographers, notifying them about upcoming Aurora activity so they can be prepared to capture the light show.

The application refreshes every 30 minutes, so users always see recent data on Aurora conditions. Using the Google Maps API, the application projects tabular data from the Space Weather Prediction Center onto an interactive map. This visual representation lets photographers see potential Aurora locations and plan their positions accordingly.
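The step from tabular data to map points might look roughly like the sketch below. It is written in Python for illustration only and is not the application's code. The table layout is an assumption: a whitespace-separated grid of integer values, with rows evenly spanning latitude -90 to 90 and columns evenly spanning longitude 0 to 360, and lines starting with `#` treated as comments. The names `grid_to_points` and `REFRESH_SECONDS` are hypothetical.

```python
from typing import List, Tuple

REFRESH_SECONDS = 30 * 60  # the 30-minute refresh interval, in seconds


def grid_to_points(text: str, min_value: int = 1) -> List[Tuple[float, float, int]]:
    """Turn a text grid into (latitude, longitude, value) points for a map overlay.

    Assumed layout: each non-comment line is one latitude row, each cell one
    longitude column. Cells below min_value are skipped.
    """
    rows = [
        [int(cell) for cell in line.split()]
        for line in text.splitlines()
        if line.strip() and not line.strip().startswith("#")
    ]
    points = []
    n_rows = len(rows)
    for r, row in enumerate(rows):
        # Spread rows evenly from -90 to 90 degrees latitude.
        lat = -90.0 + 180.0 * r / (n_rows - 1) if n_rows > 1 else 0.0
        for c, value in enumerate(row):
            # Spread columns evenly from 0 up to (but not including) 360 degrees.
            lon = 360.0 * c / len(row)
            if value >= min_value:
                points.append((lat, lon, value))
    return points
```

Each resulting point could then be drawn as a marker or heat-map cell on the map, and the whole conversion re-run once per refresh interval.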

date:

December 2016

created for:

ITP Winter Show 2016

description:

Coral Reef is a game developed using Unity 3D, offering players an immersive experience in a vibrant underwater world. The game showcases a beautifully crafted coral reef environment teeming with life, featuring various marine creatures like fish, turtles, crabs, and sharks.

I programmed dynamic schools of fish using flocking systems to make the virtual ocean feel more realistic. As the project progressed, I decided to turn it into a simple collecting game.
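For readers unfamiliar with flocking, here is a minimal 2D sketch of the classic three-rule approach (cohesion, alignment, separation). It is written in Python for illustration and is not the game's Unity code; the `Fish` class, the `flock_step` function, and all weights are assumptions chosen for the example.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Fish:
    x: float
    y: float
    vx: float = 0.0
    vy: float = 0.0


def flock_step(school: List[Fish], dt: float = 1.0, cohesion: float = 0.01,
               alignment: float = 0.05, separation: float = 0.1,
               min_dist: float = 1.0) -> None:
    """Advance every fish by one time step, in place."""
    new_velocities = []
    for f in school:
        others = [o for o in school if o is not f]
        ax = ay = 0.0
        if others:
            n = len(others)
            # Cohesion: steer toward the average position of the other fish.
            ax += cohesion * (sum(o.x for o in others) / n - f.x)
            ay += cohesion * (sum(o.y for o in others) / n - f.y)
            # Alignment: nudge velocity toward the average velocity of the others.
            ax += alignment * (sum(o.vx for o in others) / n - f.vx)
            ay += alignment * (sum(o.vy for o in others) / n - f.vy)
            # Separation: push away from any fish that is too close.
            for o in others:
                dx, dy = f.x - o.x, f.y - o.y
                if (dx * dx + dy * dy) ** 0.5 < min_dist:
                    ax += separation * dx
                    ay += separation * dy
        new_velocities.append((f.vx + ax, f.vy + ay))
    # Apply all updates together so every fish reacts to the same snapshot.
    for f, (vx, vy) in zip(school, new_velocities):
        f.vx, f.vy = vx, vy
        f.x += vx * dt
        f.y += vy * dt
```

Calling `flock_step` once per frame makes scattered fish drift together and gradually match headings, while the separation term keeps them from overlapping.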

The objective of the game is straightforward: players must collect all the white orbs scattered throughout the coral reef as quickly as possible. However, players must be cautious of the red orbs, as they add extra seconds to their total time. This adds a strategic element to the gameplay, encouraging players to optimize their collecting strategy.
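The scoring rule can be summarized in a few lines. The sketch below is in Python for illustration, not the game's Unity code. The penalty of 5 seconds per red orb is a hypothetical value (the actual number is not stated here), and the function names are made up.

```python
# Hypothetical penalty: seconds added to the total time for each red orb touched.
RED_ORB_PENALTY_SECONDS = 5.0


def final_time(elapsed_seconds: float, red_orbs_hit: int,
               penalty: float = RED_ORB_PENALTY_SECONDS) -> float:
    """Total time = time actually played + a fixed penalty per red orb."""
    return elapsed_seconds + red_orbs_hit * penalty


def is_finished(white_collected: int, white_total: int) -> bool:
    """The round ends once every white orb has been collected."""
    return white_collected >= white_total
```

Under these assumptions, a shortcut through a red orb is only worth taking if it saves more than the penalty in travel time.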

Coral Reef Game from marcela on Vimeo.

date:

2016

my position:

animator, narrator, director

published:

http://prostheticknowledge.tumblr.com/

ITP Spring Show 2016

“Self Portrait” is a video game project, where I open up my inner world for users to explore and connect with the essence of who I am.

Set in a virtual landscape, the game invites users to wander through a meticulously crafted world that personifies “me” in intricate detail. Through thoughtful storytelling and artistic design, players can immerse themselves in my unique perspective, gaining insights into the layers that make up my identity.

To elevate the experience, “Self Portrait” boasts custom-made textures and 3D scans, ensuring that every visual element is a true reflection of my innermost self.

Developed and brought to life in Unity 3D, the project invites users to embark on a transformative journey of self-discovery. It brought together art, design, and interactive technology, making it an immensely rewarding and enjoyable experience to work on.

date:

May 20/21/22, 2016

January 11th, 2017

my position:

animator, narrator, director

exhibited:

– Alt-Ai exhibition (School for Poetic Computation)

– Pulse Art + Tech Festival

Instagram Mosaic is a series of photo mosaics automatically generated from recent Instagram photos tagged with selected hashtags.
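A common way to generate a photo mosaic is to split the target image into tiles and fill each tile with the photo whose average color is closest. The sketch below shows that matching step in Python for illustration; it is not the openFrameworks code used in the project, and whether the project matched by average color is an assumption. All function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Color = Tuple[int, int, int]  # (red, green, blue), each 0-255


def average_color(pixels: Sequence[Color]) -> Color:
    """Average the RGB channels of a non-empty sequence of pixels."""
    n = len(pixels)
    return (
        sum(p[0] for p in pixels) // n,
        sum(p[1] for p in pixels) // n,
        sum(p[2] for p in pixels) // n,
    )


def closest_photo(tile_color: Color, photo_colors: List[Color]) -> int:
    """Return the index of the photo whose average color is nearest to the tile."""
    def dist2(a: Color, b: Color) -> int:
        # Squared Euclidean distance in RGB space.
        return sum((x - y) ** 2 for x, y in zip(a, b))

    return min(range(len(photo_colors)),
               key=lambda i: dist2(tile_color, photo_colors[i]))


def build_mosaic(tile_colors: List[List[Color]],
                 photo_colors: List[Color]) -> List[List[int]]:
    """For each tile of the target image, pick the best-matching photo index."""
    return [[closest_photo(c, photo_colors) for c in row] for row in tile_colors]
```

Here `photo_colors` would hold one precomputed average color per downloaded photo, and the returned grid of indices says which photo to draw in each tile.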

Software: OpenFrameworks

Video documentation:

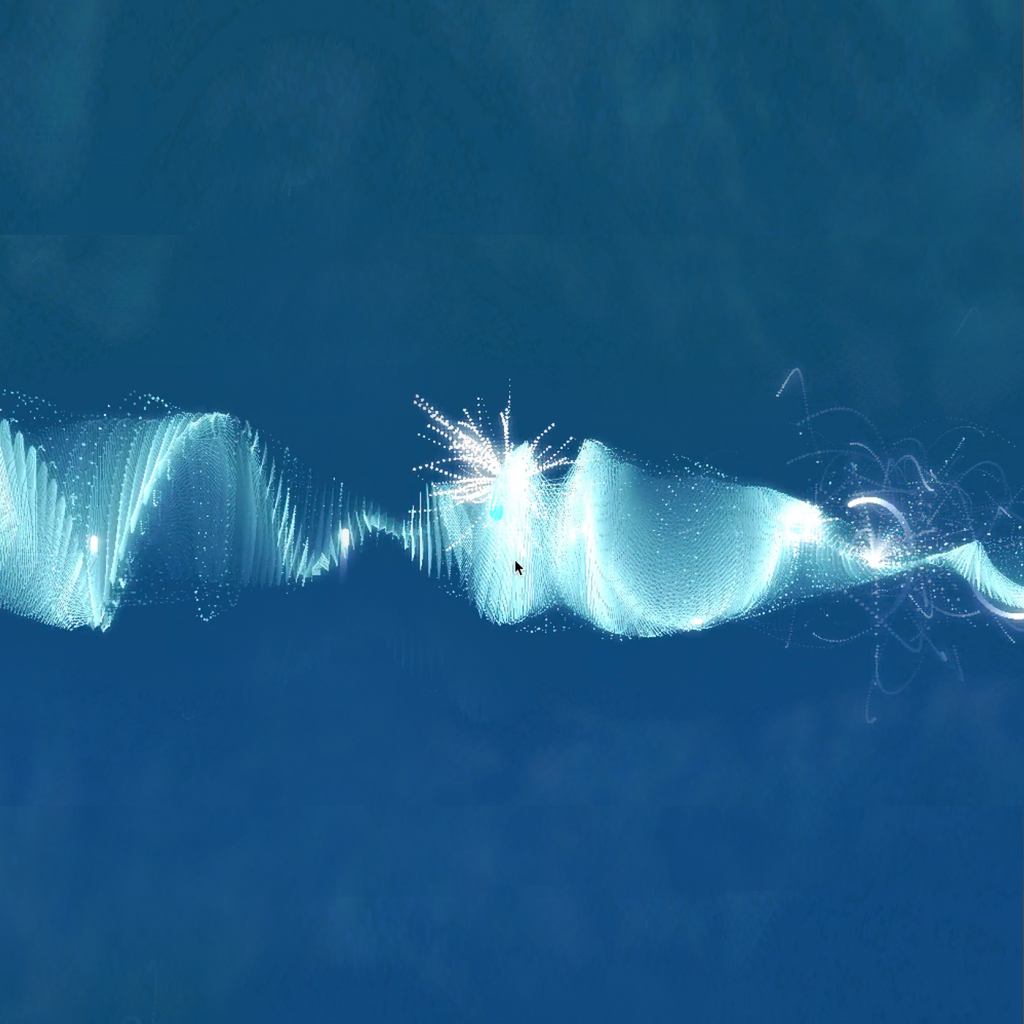

By dragging the mouse, the user can observe the whole scene from a different perspective. The ‘Y’ position of the mouse sets the size of the main (long) wave. Pressing certain keys adds more waves, which can later be connected with thin lines. The project focuses on creating an effect that is both visually and sonically pleasing. For the show, I’d love to steer the effects with either motion or sensors. I think it could look really nice displayed on a screen. Even without sound, the work could serve as a nice interactive background.